Mirascope and Langfuse Integration

We’re thrilled to announce our integration with Langfuse, the LLM engineering platform. Developing LLM applications without an observability tool is risky, so we at Mirascope have made it easy to slot Langfuse into your workflow.

Mirascope and Langfuse

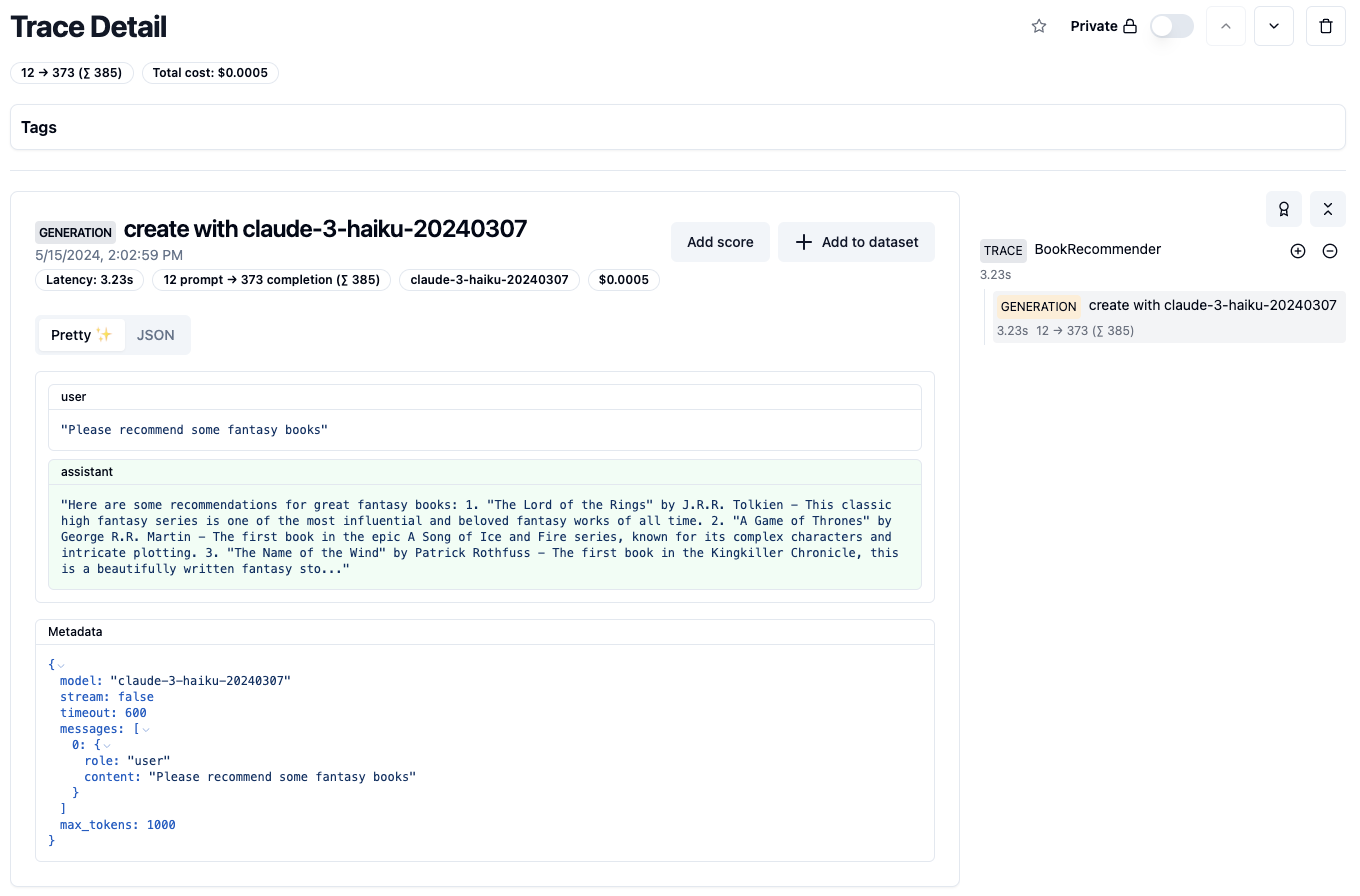

Mirascope integrates with Langfuse through the `@with_langfuse` decorator. This gives you full control over what you observe and what you don’t.
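To picture how a class decorator can give this kind of opt-in control, here is a minimal, generic sketch. It is not Mirascope's implementation: `with_tracing`, `TRACE_LOG`, `Observed`, and `Unobserved` are hypothetical names, and an in-memory list stands in for an observability backend.

```python
import functools
import time
from typing import Any, Dict, List

# Stand-in for an observability backend: records are appended to a list.
TRACE_LOG: List[Dict[str, Any]] = []


def with_tracing(cls: type) -> type:
    """Wrap a class's `call` method so each invocation is recorded.

    Hypothetical decorator for illustration; not Mirascope's implementation.
    """
    original = cls.call

    @functools.wraps(original)
    def traced_call(self: Any, *args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        result = original(self, *args, **kwargs)
        TRACE_LOG.append(
            {
                "name": cls.__name__,
                "output": result,
                "latency_s": time.perf_counter() - start,
            }
        )
        return result

    cls.call = traced_call
    return cls


@with_tracing
class Observed:
    def call(self) -> str:
        return "traced"


class Unobserved:
    def call(self) -> str:
        return "not traced"


Observed().call()
Unobserved().call()
print([record["name"] for record in TRACE_LOG])  # only the decorated class is recorded
```

Because tracing is attached per class, leaving the decorator off a class is enough to keep it out of the log.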

Call

This basic call example also applies to the other Mirascope call functions, such as `stream`, `call_async`, and `stream_async`.

```python
import os

from mirascope.langfuse import with_langfuse
from mirascope.anthropic import AnthropicCall

os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com"


@with_langfuse
class BookRecommender(AnthropicCall):
    prompt_template = "Please recommend some {genre} books"

    genre: str


recommender = BookRecommender(genre="fantasy")
response = recommender.call()  # this will automatically get logged with langfuse
print(response.content)
```

And that’s it! Your Mirascope class methods are now recorded as Langfuse traces, and each LLM call is recorded as a Langfuse generation. Mirascope automatically sends tags, latency, cost, and other metadata alongside the inputs and outputs.

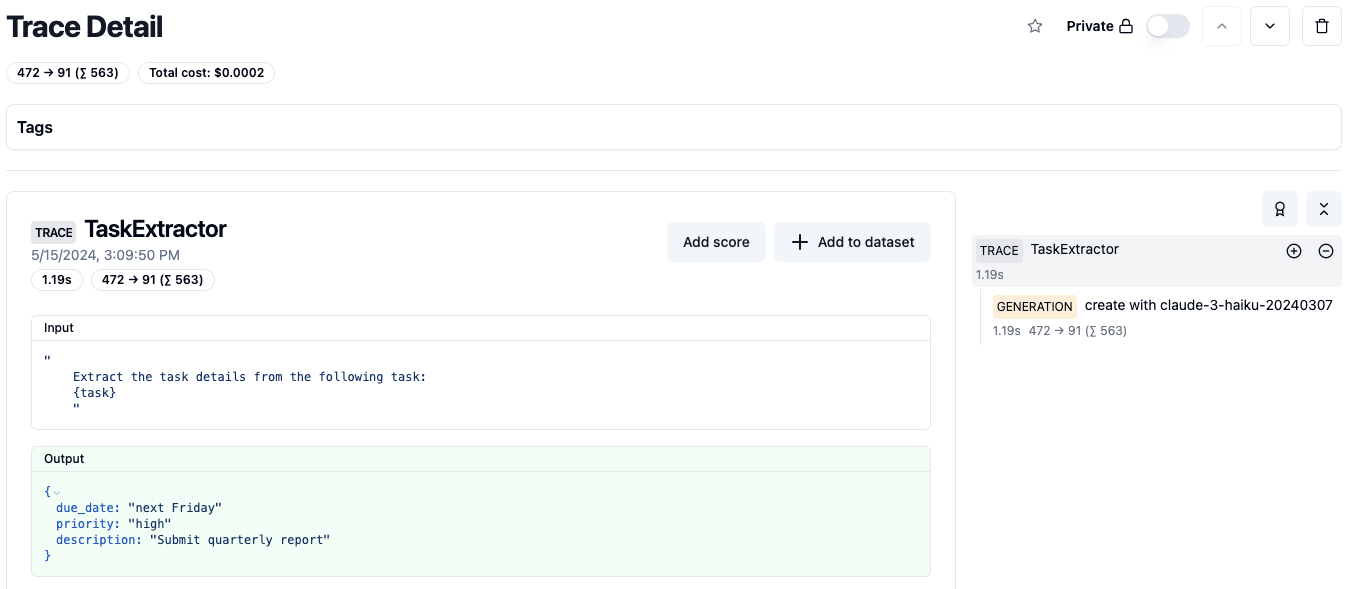
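The example above sets the Langfuse credentials inline for brevity. In a real project you may prefer to load them from the environment and fail fast when one is missing. The following is a minimal sketch of that idea: the variable names come from the example above, while `require_langfuse_env` is a hypothetical helper, not part of Mirascope or Langfuse.

```python
import os
from typing import Dict, Mapping, Optional

# Variable names taken from the example above.
REQUIRED_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY", "LANGFUSE_HOST")


def require_langfuse_env(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the Langfuse settings, raising if any are missing or empty.

    Hypothetical helper for illustration; not part of Mirascope or Langfuse.
    """
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not source.get(name)]
    if missing:
        raise RuntimeError(f"Missing Langfuse settings: {', '.join(missing)}")
    return {name: source[name] for name in REQUIRED_VARS}
```

Calling `require_langfuse_env()` at startup, before any decorated class is used, surfaces a misconfigured key as a clear error instead of silently missing traces.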

Extract

You can also observe Mirascope extractors in Langfuse. Extractors pull structured, relevant information out of your data. By monitoring them in Langfuse, you can assess the quality of the extracted data and check that your results are accurate.

```python
import os
from typing import Literal, Type

from pydantic import BaseModel

from mirascope.anthropic import AnthropicExtractor
from mirascope.langfuse import with_langfuse

os.environ["LANGFUSE_PUBLIC_KEY"] = "pk-lf-..."
os.environ["LANGFUSE_SECRET_KEY"] = "sk-lf-..."
os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com"


class TaskDetails(BaseModel):
    description: str
    due_date: str
    priority: Literal["low", "normal", "high"]


@with_langfuse
class TaskExtractor(AnthropicExtractor[TaskDetails]):
    extract_schema: Type[TaskDetails] = TaskDetails
    prompt_template = """
    Extract the task details from the following task:
    {task}
    """

    task: str


task = "Submit quarterly report by next Friday. Task is high priority."
task_details = TaskExtractor(
    task=task
).extract()  # this will be logged automatically with langfuse
assert isinstance(task_details, TaskDetails)
print(task_details)
```

Head over to Langfuse and you can view your results!